Let’s first understand the storage families, features and types in the IT landscape before diving into cost, performance and management complexity. In the current storage landscape, most enterprise and personal devices, whether servers, laptops or desktops, still use spinning hard disk drives (HDDs). An SSD (Solid State Drive) has no mechanical parts and comes in a smaller form factor than a traditional HDD. DAS (Direct Attached Storage) has to be administered separately for each server, which makes it inconvenient for transferring data between servers. iSCSI is better than Fibre Channel (FC) for moving data between devices over distance, because the former runs over standard IP networks whereas the latter needs a dedicated Fibre Channel fabric (typically fiber-optic media); however, iSCSI is not as fast as FC. Magnetic tape is better from a capacity perspective, but it is not a modern solution for quick data recovery.

Let’s contrast NAS with SAN. NAS (Network Attached Storage) is storage attached to a network; the servers on that network may share one or two storage servers. Any machine or server on the network can connect to the NAS storage (typically backed by a RAID device), and the NAS can in turn be backed up to tape for archival. That connection can use SCSI, which is faster than Ethernet, and the next version of SCSI is expected to cut overhead and increase data throughput.

Only server-class devices with SCSI over Fibre Channel can connect to a SAN (Storage Area Network), and the distance can go up to 10 km. File systems are managed by the NAS head unit, whereas in a SAN each server manages its own file system. In NAS, backups and mirroring operate on files rather than on blocks as in SAN, which saves bandwidth and time; as a result, a NAS mirror can need less space than its source, whereas a SAN mirror must be at least as large as the source volume.
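The space difference in mirroring follows from what gets copied. The minimal Python sketch below, assuming a hypothetical `Volume` model and a 4 KiB block size (both illustrative, not any vendor’s implementation), compares a file-level (NAS-style) mirror, which copies only file contents, with a block-level (SAN-style) mirror, which copies every block whether it holds data or not.

```python
from dataclasses import dataclass, field

BLOCK_SIZE = 4096  # assumed block size in bytes (illustrative only)


@dataclass
class Volume:
    """Hypothetical volume: a fixed number of blocks plus a file table."""

    total_blocks: int
    # Maps each file name to its size in bytes.
    files: dict[str, int] = field(default_factory=dict)


def file_level_mirror_bytes(vol: Volume) -> int:
    """File-level (NAS-style) mirror: copy only the bytes that belong to files."""
    return sum(vol.files.values())


def block_level_mirror_bytes(vol: Volume) -> int:
    """Block-level (SAN-style) mirror: copy every block, used or empty,
    so the target must be at least as large as the source volume."""
    return vol.total_blocks * BLOCK_SIZE


if __name__ == "__main__":
    # Hypothetical 1,000-block volume holding two files.
    vol = Volume(total_blocks=1000, files={"a.log": 10_000, "b.csv": 50_000})
    print("file-level mirror bytes:", file_level_mirror_bytes(vol))   # 60000
    print("block-level mirror bytes:", block_level_mirror_bytes(vol))  # 4096000
```

With these hypothetical values the file-level mirror copies 60,000 bytes while the block-level mirror copies all 4,096,000 bytes of the volume. Real products layer snapshots, deduplication and change tracking on top, which this toy model ignores.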

Moving large blocks of data is less convenient with NAS than with SAN. NAS is built on file-based protocols such as NFS, FTP and CIFS (a NAS is essentially a file server) that run over IP networks. Typical use cases for NAS are file sharing, centralized logging and home directories, whereas SAN typically serves RDBMS workloads (Oracle etc.) and server virtualization solutions such as VMware vCenter and VMware VDI.
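The file-versus-block distinction is also visible in how a client asks for data. In this minimal Python sketch, a temporary file stands in for both a file on an NFS/CIFS mount and a raw block device (LUN) presented by a SAN; the helper names and the 512-byte block size are illustrative assumptions. File-level access names a file and lets the storage side resolve where it lives, while block-level access asks for raw blocks by logical block address (LBA) and leaves their meaning to the host’s own file system.

```python
import os
import tempfile

BLOCK_SIZE = 512  # assumed logical block size in bytes


def read_file_level(path: str) -> bytes:
    """File-level (NAS-style) access: request a file by name; on a real NAS the
    path would sit on an NFS or CIFS mount and the NAS head resolves it."""
    with open(path, "rb") as f:
        return f.read()


def read_block_level(device_path: str, lba: int, count: int = 1) -> bytes:
    """Block-level (SAN-style) access: request `count` raw blocks starting at
    logical block address `lba`; the host's file system interprets them."""
    with open(device_path, "rb") as dev:
        dev.seek(lba * BLOCK_SIZE)
        return dev.read(count * BLOCK_SIZE)


if __name__ == "__main__":
    # Simulate a tiny 4-block "disk" in a temporary file: blocks of A, B, C, D.
    with tempfile.NamedTemporaryFile(delete=False) as img:
        for i in range(4):
            img.write(bytes([ord("A") + i]) * BLOCK_SIZE)
        image_path = img.name
    try:
        print(read_block_level(image_path, lba=2)[:4])  # b'CCCC'
        print(len(read_file_level(image_path)))         # 2048
    finally:
        os.remove(image_path)
```

Reading a real block device this way usually requires elevated privileges, and production SAN access goes through the host’s FC or iSCSI stack rather than application code.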

Flash arrays, such as IBM’s FlashSystem line, are another class of storage product; they deliver cost-efficient storage, particularly in the cloud, thanks to lower power consumption, less heat and a smaller footprint.

Consolidation, standardization and automation are the mantra for an optimal storage solution. Therefore, any IT solution encompassing tools and services must show tangible improvement in all three areas to yield efficiency and capability. An SDDC (Software-Defined Data Center) makes storage manageable through a single pane of management.

Social media, mobile usage (BYOD, mobile business applications etc.), analytics (including advanced analytics) and cloud solutions (which give strong, cost-efficient support to new SMEs) are driving the need to grow the storage footprint exponentially and to structure the storage service segment optimally. The need is amplified further by the evolution of IoT (Internet of Things), which is forecast to connect more than 40 billion devices and to generate 40% of 40 ZB of data by 2020. Thanks to technology innovation and competition, storage cost does not grow in proportion to this data growth; however, limited data center (DC) space, power consumption, cooling, growing operational cost and data management complexity put pressure on enterprises to optimize their storage assets.

Beyond operational priorities such as integrated backup and DR (disaster recovery), I/O performance, automated storage management, VM migration support, storage tiering and thin provisioning, the top long-term priorities should be ease of management, scalability and space optimization. The backbone of the business is an infrastructure and application ecosystem able to derive analytic, actionable information from raw data that helps sustain or grow the business, which makes smart storage one of the most essential infrastructure requirements of this era.
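Thin provisioning, one of the operational features listed above, can be illustrated with a small model. This is a minimal Python sketch under stated assumptions: the hypothetical `StoragePool` and `ThinVolume` classes advertise a logical size up front but consume physical blocks from a shared pool only on first write, which is why a pool can be over-committed and why free pool space has to be monitored.

```python
BLOCK_SIZE = 4096  # assumed block size in bytes (illustrative only)


class StoragePool:
    """Hypothetical shared pool of physical blocks."""

    def __init__(self, physical_blocks: int) -> None:
        self.capacity = physical_blocks
        self.blocks: dict[int, bytes] = {}  # physical block -> stored data
        self.next_free = 0

    def allocate(self) -> int:
        if self.next_free >= self.capacity:
            raise RuntimeError("thin pool exhausted: logical space was over-committed")
        index = self.next_free
        self.next_free += 1
        return index

    def used(self) -> int:
        return self.next_free


class ThinVolume:
    """Hypothetical thin volume: large logical size, physical blocks on demand."""

    def __init__(self, logical_blocks: int, pool: StoragePool) -> None:
        self.logical_blocks = logical_blocks
        self.pool = pool
        self.mapping: dict[int, int] = {}  # logical block -> physical block

    def write(self, lba: int, data: bytes) -> None:
        if not 0 <= lba < self.logical_blocks:
            raise IndexError("LBA is outside the advertised volume size")
        if lba not in self.mapping:
            # Allocate on first write; this is what saves space versus thick provisioning.
            self.mapping[lba] = self.pool.allocate()
        self.pool.blocks[self.mapping[lba]] = data

    def read(self, lba: int) -> bytes:
        if not 0 <= lba < self.logical_blocks:
            raise IndexError("LBA is outside the advertised volume size")
        # Blocks never written read back as zeros and consume no pool space.
        if lba not in self.mapping:
            return bytes(BLOCK_SIZE)
        return self.pool.blocks[self.mapping[lba]]


if __name__ == "__main__":
    pool = StoragePool(physical_blocks=100)
    # Three volumes promise 240 logical blocks against 100 physical ones.
    volumes = [ThinVolume(logical_blocks=80, pool=pool) for _ in range(3)]
    volumes[0].write(0, b"x" * BLOCK_SIZE)
    volumes[1].write(5, b"y" * BLOCK_SIZE)
    print(pool.used())  # 2
```

The over-commit ratio here (240 logical to 100 physical blocks) is harmless until writes actually exhaust the pool, at which point `allocate` fails; this is why thin provisioning is usually paired with capacity alerts.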

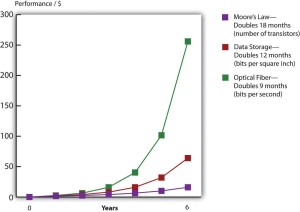

Today, enterprises lock down a suitable storage platform for Big Data, unstructured workloads etc. during the design phase: they choose between file-based and object-based storage, identify a single access mechanism to feed data into the platform, decide whether to move data to compute or compute to data, and create separate storage infrastructure islands for different workloads such as Hadoop, search and analytics. On top of all application and operational workloads, enterprises also have to factor in the storage footprint for backup, archival, DR etc. All of this leads to infrastructure fragmentation, raising cost and increasing management complexity, and these complexities multiply in the cloud ecosystem. Some leading organizations are developing innovative solutions that run applications directly at the backup site instead of restoring data and then turning applications on after DR, and that use one central storage system for archival, DR and backup. Solutions like these will reduce complexity and cost while data keeps doubling every year, per the Moore’s law of storage.
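Doubling every year compounds quickly, which is one reason capacity planning has to look several years ahead. The minimal Python sketch below assumes a constant annual growth rate (a simplification; real growth is uneven); the function name and the 100 TB starting point are hypothetical.

```python
def project_capacity(current_tb: float, years: int, annual_growth: float = 1.0) -> list[float]:
    """Projected capacity (TB) for year 0..years under compound growth.

    annual_growth=1.0 means +100% per year, i.e. the yearly doubling that the
    Moore's law of storage framing assumes.
    """
    if years < 0:
        raise ValueError("years must be non-negative")
    return [current_tb * (1 + annual_growth) ** year for year in range(years + 1)]


if __name__ == "__main__":
    # Hypothetical environment holding 100 TB today.
    print(project_capacity(100, 5))  # [100.0, 200.0, 400.0, 800.0, 1600.0, 3200.0]
```

Under that assumption, 100 TB today becomes 3,200 TB within five years, so sizing only for current needs quickly falls short.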

Moore’s Law of Storage

Conventional storage standards are being converted to open standards through the EDLP (Enterprise Data Lake Platform), which would support protocols ranging from file-based ones (NFS, FTP, CIFS, pNFS) to object-based ones (RESTful), while increasing the complexity of the whole storage ecosystem. As EDLP evolves, it needs to support multiple access points (open, standards-based or application-specific), which makes its metadata more complex and requires it to be extensible enough to support all the variants in features and standards. Deep-storage EDLP solutions also add complexity in keeping the dollar cost per IOPS low for data that ranges from frequently to infrequently accessed.
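The tension between keeping $/IOPS low for hot data and $/GB low for cold data is what storage tiering addresses. The minimal Python sketch below places data on the cheapest tier (by $/GB) whose IOPS density still satisfies the data’s access rate; the `Tier` class, the `place` function and every cost and performance figure are made-up placeholders for illustration, not vendor pricing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    monthly_cost: float  # hypothetical $ per month for one unit of this tier
    capacity_gb: float   # hypothetical usable capacity of that unit
    iops: float          # hypothetical IOPS that unit can sustain

    @property
    def cost_per_gb(self) -> float:
        return self.monthly_cost / self.capacity_gb

    @property
    def cost_per_iops(self) -> float:
        return self.monthly_cost / self.iops


# Illustrative tiers, fastest first. All figures are placeholders.
TIERS = [
    Tier("flash", monthly_cost=1000.0, capacity_gb=10_000, iops=200_000),
    Tier("hdd", monthly_cost=500.0, capacity_gb=50_000, iops=2_000),
    Tier("archive", monthly_cost=100.0, capacity_gb=200_000, iops=50),
]


def place(required_iops_per_gb: float) -> Tier:
    """Cheapest tier (by $/GB) whose IOPS density meets the data's access rate."""
    fits = [t for t in TIERS if t.iops / t.capacity_gb >= required_iops_per_gb]
    if not fits:
        raise ValueError("no tier is fast enough for this access rate")
    return min(fits, key=lambda t: t.cost_per_gb)


if __name__ == "__main__":
    for tier in TIERS:
        print(f"{tier.name}: ${tier.cost_per_gb:.4f}/GB, ${tier.cost_per_iops:.3f}/IOPS")
    print(place(5.0).name)     # flash (hot data)
    print(place(0.01).name)    # hdd (warm data)
    print(place(0.0001).name)  # archive (cold data)
```

In this toy model flash has the lowest $/IOPS and the archive tier the lowest $/GB, so hot data lands on flash and cold data on archive; a real tiering engine would also track access statistics over time and move data between tiers as it cools.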

In summary, the need of the hour is to take into account factors such as the multitude of solutions offered by vendors, cost, performance, ease of management and capacity planning, while aligning with the business, and to design a robust storage solution. It could be a hybrid of NAS, SAN clusters, cloud (private, hybrid or public), DAS, software-defined storage and a storage hypervisor to manage and configure storage optimally. It is worth investing time upfront rather than spending days of effort fixing storage-related issues later.