Business units and their IT counterparts need to speed up time to market and adapt to evolving market demands to stay competitive in the digital economy. This requires agile IT built on a data center infrastructure that boosts the efficiency with which IT serves the business while reducing Capex and Opex. We have moved from dedicated devices designed to support a specific application or business process, to shared environments, to cloud setups, using software to decouple hardware and devices from processes so that IT services can be delivered efficiently. Whatever phase we are in is temporary, and we need to get ready for a next phase that we cannot predict precisely. Change is the only constant; it agitates and fuels the current IT ecosystem to move to the next stage of evolution. This has brought us to a stage where services are rendered through software, by creating a myriad of abstraction layers around physical components such as CPU, storage and networks. I would like to emphasize the challenges at hand while summarizing the journey we have made so far in IT infrastructure evolution, along with the changing IT skill landscape, which could lead us to think about the next phase of evolution in each fundamental component: compute, storage, network and security.

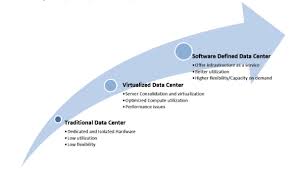

Challenges and SDDC Evolution: Our current infrastructure is the result of a design approach that targeted specific business functions and followed a linear methodology for capacity forecasting, expressed in terms such as the number of jobs per second at peak time, data growth of 2 to 5% per month, or subscriber growth at a certain percentage. Traditionally, a high-level business need would translate into technical requirements and specifications, which in turn determined the number of CPUs, storage capacity, security and authentication needs, and network bandwidth, across systems ranging from AS400 to mainframe (MF) to distributed platforms, following a multitier architecture that connected the various components of the IT fabric. When volumes and projected growth do not materialize as envisaged, this way of sizing compute and storage leads to either undersizing or oversizing the footprint. Often, such islands of compute and storage leave resources underutilized. This has a cascading effect on investment and on the effort spent on energy consumption, management overheads, software licenses and data center costs.
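The sizing risk described above can be made concrete with a few lines of Python. This is a minimal sketch, assuming that the quoted 2 to 5% monthly data growth compounds month over month and that capacity is bought once for a 36-month horizon; the starting volume, horizon and function names are hypothetical.

```python
# Hypothetical capacity-planning sketch; every figure below is an illustrative assumption.


def project_capacity(initial_tb: float, monthly_growth: float, months: int) -> float:
    """Project storage demand assuming growth compounds monthly."""
    return initial_tb * (1 + monthly_growth) ** months


def sizing_gap(provisioned_tb: float, actual_tb: float) -> float:
    """Positive result = oversized (idle capacity); negative = undersized (shortfall)."""
    return provisioned_tb - actual_tb


if __name__ == "__main__":
    initial = 100.0  # TB in use today (assumed)
    horizon = 36     # three-year planning horizon, in months (assumed)

    # Capacity is bought up front for the optimistic 5%/month forecast...
    provisioned = project_capacity(initial, 0.05, horizon)
    # ...but actual data growth turns out to be 2%/month.
    actual = project_capacity(initial, 0.02, horizon)

    gap = sizing_gap(provisioned, actual)
    print(f"provisioned={provisioned:.1f} TB, actual={actual:.1f} TB, gap={gap:+.1f} TB")
```

Swapping the two rates (sizing for 2% while growth actually runs at 5%) makes the gap negative, which is the undersized case.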

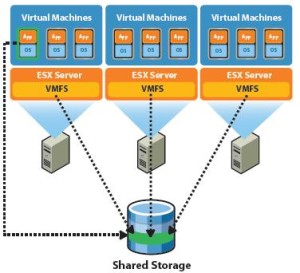

Shared Resources: The shortcomings of the previous model led to the idea of a shared, virtualized model to increase efficiency and utilization. IT departments would group applications by workload and business line; for example, back-end financial applications would reside on a mainframe (MF) system with strong security and fast processing, whereas online CRM, ERP and similar applications would reside on a combined distributed platform running Linux or Windows, along with middleware components such as MQ Series and JMX. Hypervisors such as ESX (together with network virtualization platforms such as NSX) abstract the underlying hardware (compute, storage and network) into virtual machines: guests with different operating systems and the desired network attributes that run application workloads while using the host's components to the maximum. This model uses resources efficiently when workloads behave as expected. Its challenge has been a lack of dynamism: when one or more application workloads begin to consume more resources than expected, several guest operating systems can run short of compute resources, impacting business application service level agreements (SLAs). Software has largely delivered the shared-resources capability; the challenge of processing demand from dynamic workloads is now being addressed by the cloud computing model, with IaaS (Infrastructure as a Service) as the delivery model.
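The contention scenario can be illustrated with a minimal sketch. It assumes a deliberately simplified fair-share policy in which every guest is scaled down proportionally once total demand exceeds host capacity; real hypervisors use richer controls (shares, reservations, limits), and the host size, guest names and 90% SLA tolerance here are hypothetical.

```python
# Hypothetical sketch of CPU contention on a shared virtualization host.
from typing import Dict, List


def allocate_cpu(host_capacity_ghz: float, demand_ghz: Dict[str, float]) -> Dict[str, float]:
    """Grant every guest its full demand if the host can cover it;
    otherwise scale all guests down proportionally (simple fair share)."""
    total = sum(demand_ghz.values())
    if total <= host_capacity_ghz:
        return dict(demand_ghz)
    scale = host_capacity_ghz / total
    return {vm: d * scale for vm, d in demand_ghz.items()}


def starved_guests(demand: Dict[str, float], granted: Dict[str, float],
                   tolerance: float = 0.9) -> List[str]:
    """Guests receiving less than `tolerance` of their demand (SLA at risk)."""
    return sorted(vm for vm, d in demand.items() if granted[vm] < tolerance * d)


if __name__ == "__main__":
    host = 40.0  # GHz available on the host (assumed)
    demand = {"crm": 10.0, "erp": 12.0, "batch": 30.0}  # the batch workload spikes
    granted = allocate_cpu(host, demand)
    print(granted)
    print("SLA at risk:", starved_guests(demand, granted))
```

A single spiking workload pushes total demand past host capacity, so every guest, not just the one that spiked, falls below its tolerance: the lack of dynamism described above.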

Software can be leveraged to provision servers with adequate resources to meet the dynamic requirements of applications. The success of enterprise server virtualization, with its automation, self-service, orchestration and metering capabilities, has prompted IT departments to extend this capability across the data center, with software controlling the hardware. However, we need to think beyond compute to storage and network in order to get the full benefit of server virtualization: once compute is elastic, the hardware constraint shifts to storage IO and network bandwidth, and we need to apply software to these layers as well to make them as elastic as virtualized servers.

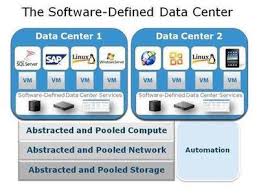

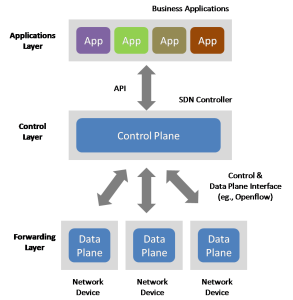

The software-defined data center (SDDC) is the way forward for digitalizing the data center in support of the digital economy. SDDC enables each essential component of the data center through software, bringing the flexibility and elasticity needed to meet dynamic IT service requirements. As Forrester defines it, “SDDC is a comprehensive abstraction of a complete data center.” SDDC is about controlling the bare metal with the ability to turn services on and off, thereby shrinking or expanding resources to meet a defined level of service assurance. The mechanism for achieving this is the ability to abstract the hardware layer and provide compute services as virtual resources to the applications that request them, through server virtualization, storage virtualization (software-defined storage, SDS) and network virtualization (software-defined networking, SDN). The potential impact of SDDC products is immense: they offer an integrated architecture that merges legacy architectures, cloud computing and workload-centric architectures into a single manageable domain.
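The idea of shrinking or expanding resources to meet a service level can be sketched as a simple threshold policy. This is a minimal illustration, assuming average utilization is the service-level signal; the class name, thresholds and instance bounds are hypothetical, and production autoscalers typically add cooldown periods and smoothing on top of this.

```python
# Hypothetical threshold-based elasticity policy; thresholds and bounds are assumptions.
from dataclasses import dataclass


@dataclass
class ScalingPolicy:
    scale_out_at: float = 0.75  # add an instance above this average utilization
    scale_in_at: float = 0.30   # release an instance below this average utilization
    min_instances: int = 2      # floor kept for availability
    max_instances: int = 10     # ceiling set by budget or capacity

    def desired_instances(self, current: int, avg_utilization: float) -> int:
        """Return the instance count the service should move to next."""
        if avg_utilization > self.scale_out_at:
            target = current + 1
        elif avg_utilization < self.scale_in_at:
            target = current - 1
        else:
            target = current
        # Clamp to the configured floor and ceiling.
        return max(self.min_instances, min(self.max_instances, target))


if __name__ == "__main__":
    policy = ScalingPolicy()
    instances = 2
    for util in [0.50, 0.82, 0.91, 0.40, 0.20, 0.10]:
        instances = policy.desired_instances(instances, util)
        print(f"utilization={util:.0%} -> instances={instances}")
```

With this load trace the service grows from 2 to 4 instances during the spike and returns to 2 as load falls, never dropping below the configured floor.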

Server virtualization has become a mainstream component of cloud setups and data centers; the work still ahead is designing and implementing workable solutions for SDN and SDS. All in all, the SDDC concept looks great in terms of infrastructure flexibility, elasticity and the resulting benefits to the digitalization drive, but it remains theoretical until IT departments (together with established vendor partners) come up with workable solutions that integrate with existing hardware and legacy setups such as AS400 and MF, while addressing the security triad of CIA (Confidentiality, Integrity and Availability).

We can see some movement toward realizing SDDC, such as the OpenDaylight (ODL) project https://www.opendaylight.org/, in which a group of companies set the common goal of establishing an open-source framework for software-defined networking (SDN). This enables IT players to instantiate networks in software while keeping basic network features such as IP filtering and packet forwarding, isolating network segments and removing device dependencies. Similarly, storage is undergoing virtualization: a storage pool spanning modern and legacy units is kept in place and an abstraction layer is created on top of it (SDS, software-defined storage). IT players have been able to map abstraction layers onto hardware devices regardless of their level of maturity. The future state of SDDC is to have all hardware components managed, controlled and governed by software, easing the administrator's job and running day-to-day operations through automated, orchestrated job workflows such as provisioning of VMs, vRAM, vIO, vCPU, firewall policies, VLANs and more. SDDC must also take care of all mechanical and energy requirements in a data center, such as the cooling system, adequate power supply and recycling processes, along with an intelligence and analytics setup involving AI (Artificial Intelligence), robotic process automation and IoT. Areas that require little analytical intervention are the candidates for automation. IoT involves object-to-object, object-to-edge, object-to-server and edge-to-server communication, with considerable intelligence, varied protocols and adequate security in place. This area is still evolving, but SDDC can leverage some aspects of IoT, such as integrating thermal and cooling systems with thermostats, or communication between objects in different data centers (DR/BCP) as a fallback mechanism.
SDDC needs self-healing features: it should accumulate events and the corresponding responses in a data warehouse (DWH), enrich its knowledge base from these event-response transactions, and take action and course-correct on its own.
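The self-healing loop described above can be sketched as a small event-response knowledge base. This is a minimal sketch, assuming each remediation attempt is logged with a success flag and that "learning" means preferring the response with the best observed success rate; an in-memory dictionary stands in for the DWH, and the class, event and response names are hypothetical.

```python
# Hypothetical self-healing knowledge base: event types, responses and the
# min_attempts threshold are illustrative assumptions.
from collections import defaultdict
from typing import Optional


class RemediationKB:
    """Learns which response best resolves each event type from past outcomes."""

    def __init__(self) -> None:
        # event type -> response -> [successes, attempts]
        self._stats = defaultdict(lambda: defaultdict(lambda: [0, 0]))

    def record(self, event: str, response: str, succeeded: bool) -> None:
        """Store one event-response transaction and its outcome."""
        stats = self._stats[event][response]
        stats[1] += 1
        if succeeded:
            stats[0] += 1

    def best_response(self, event: str, min_attempts: int = 3) -> Optional[str]:
        """Pick the response with the highest observed success rate, or None
        when there is not yet enough history (escalate to an operator)."""
        rates = {
            response: successes / attempts
            for response, (successes, attempts) in self._stats.get(event, {}).items()
            if attempts >= min_attempts
        }
        if not rates:
            return None
        return max(rates, key=rates.get)


if __name__ == "__main__":
    kb = RemediationKB()
    for ok in (True, True, False):
        kb.record("disk_full", "purge_old_logs", ok)
    for ok in (True, False, False):
        kb.record("disk_full", "restart_service", ok)
    print(kb.best_response("disk_full"))
    print(kb.best_response("link_down"))
```

Returning None for unfamiliar events keeps a human in the loop until enough event-response history has accumulated to act automatically.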

Skills and Roles

Virtualization and the cloud revolution have brought unprecedented levels of agility and automation to IT infrastructure and data centers around the globe. As a result, the changing nature of data center architecture, moving from physical to virtual to software-defined setups, requires a shift in various roles and skills within the IT department. IT can spend less time on operations and more time building highly efficient applications, which leads to radical changes in traditional IT roles and responsibilities across network, security and operations. With the evolution of SDDC, IT teams need to work collaboratively, converging and blending network, storage, security and compute, which calls for more generalists than specialists compared with the siloed, domain-based structure of the past. Please take a look at my previous blog post on evolving skill trends at http://www.techmanthan.com/index.php/2015/07/03/trend-in-it-skill-genralists-vs-specifics/. SDDC brings business and IT closer to each other, which is exactly what the era of a fast-moving digital economy requires. Information security teams will need to embrace the security elements that go into the design of virtualization, such as firewall policies and IP filtering mechanisms. Because an SDDC runs on virtualization scripts and hypervisors across all hardware components, technicians need to understand and embrace scripting or programming languages to work in this heterogeneous environment. Legacy systems need to stay integrated with the common fabric through plugins, APIs or programs. IT teams need to be adept at scripting tools and languages such as PowerCLI, Perl and Python to set up and administer automation, which is crucial for orchestrating workflows for operational activities such as VM provisioning and configuration management.
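A VM-provisioning workflow of the kind mentioned above is often structured as ordered steps that can each be undone if a later step fails. The sketch below assumes that model (a saga-style rollback); it uses plain Python rather than PowerCLI or any vendor SDK, and the helper names and steps (vCPU/vRAM, vLAN, firewall policy) are hypothetical stand-ins for real API calls.

```python
# Hypothetical orchestration sketch: step names and the simulated failure are
# illustrative; a real workflow would call a hypervisor or SDN controller API.
from typing import Callable, List, Tuple

Action = Callable[[dict], None]
Step = Tuple[str, Action, Action]  # (name, do, undo)


def run_workflow(steps: List[Step], ctx: dict) -> bool:
    """Run steps in order; if one fails, undo the completed ones in reverse."""
    completed: List[Step] = []
    log = ctx.setdefault("log", [])
    for step in steps:
        name, do, _ = step
        try:
            do(ctx)
        except Exception as exc:
            log.append(f"{name} failed: {exc}")
            for done_name, _, undo in reversed(completed):
                undo(ctx)
                log.append(f"rolled back {done_name}")
            return False
        completed.append(step)
        log.append(f"{name} ok")
    return True


def make_step(name: str, fail: bool = False) -> Step:
    """Build a stand-in step that records the resource it 'creates'."""
    def do(ctx: dict) -> None:
        if fail:
            raise RuntimeError("simulated error")
        ctx.setdefault("resources", []).append(name)

    def undo(ctx: dict) -> None:
        ctx["resources"].remove(name)

    return (name, do, undo)


if __name__ == "__main__":
    ctx: dict = {}
    ok = run_workflow(
        [make_step("vCPU/vRAM"), make_step("vLAN"),
         make_step("firewall policy", fail=True), make_step("power on VM")],
        ctx,
    )
    print(ok, ctx["resources"], ctx["log"])
```

Running it prints False, an empty resource list, and a log showing the firewall step failing followed by rollback of the vLAN and vCPU/vRAM steps in reverse order, so a failed provisioning run leaves no half-built VM behind.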

Summary

Cloud delivery such as IaaS (Infrastructure as a Service) is just one component of SDDC. Integration layers between legacy hardware and data center facilities, and an all-encompassing monitoring and management stack, are among the other key tenets of SDDC. The digital economy (see http://www.techmanthan.com/index.php/2015/09/20/digital-infrastructure-platform-to-support-digital-economy/) is all about providing an immersive, integrative platform on which end users, business teams and IT players keep ideating and exploring innovative products and solutions together. SDDC is the gateway for digital infrastructure to keep pace with the fast-moving digital economy. The digital economy and digital infrastructure complement each other and are inseparable, in the sense that an innovation in one cannot be realized without support from the other. IT and business partners need to adopt SDDC in a controlled manner, without wasting too much prior investment in hardware and legacy systems. Software is the key integrator that connects all hardware and devices and realizes the benefits of SDDC.